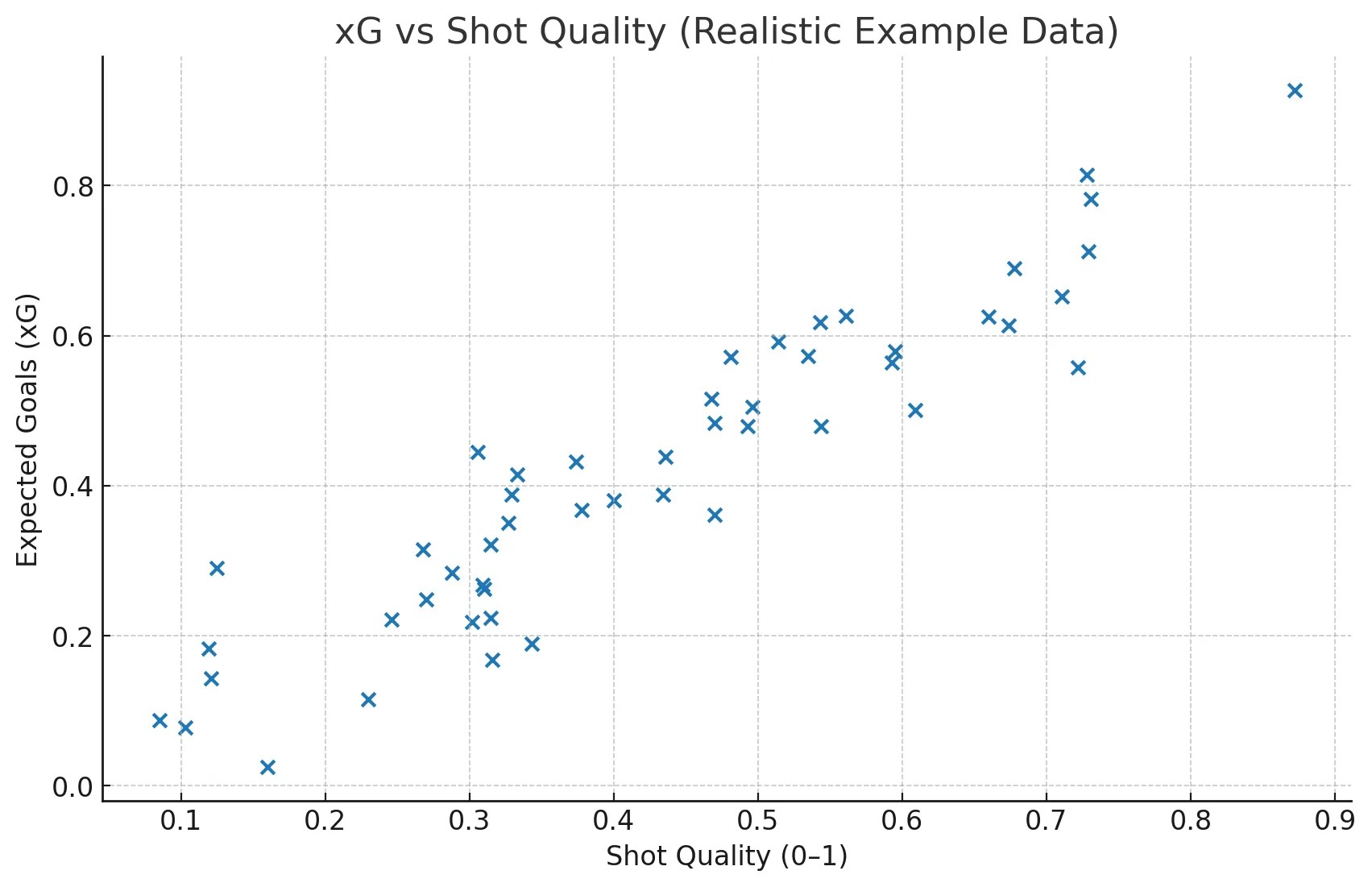

Expected Goals vs Shot Quality

By GoalLineAI Editorial • 2025-08-17 • 6–8 min read

In Expected Goals vs Shot Quality, boring reliability beats glossy dashboards that never change a decision. Prioritize evidence packs, keep consent visible, and treat ownership as non‑negotiable. Make pilots consistent so handoffs are smooth, and review alerts every week to avoid drift. If a step never changes an action, remove it and free attention.

Expected Goals vs Shot Quality works best when roles are explicit and evidence travels with the team. Put evidence packs first, surface consent clearly, and consider ownership non‑negotiable.

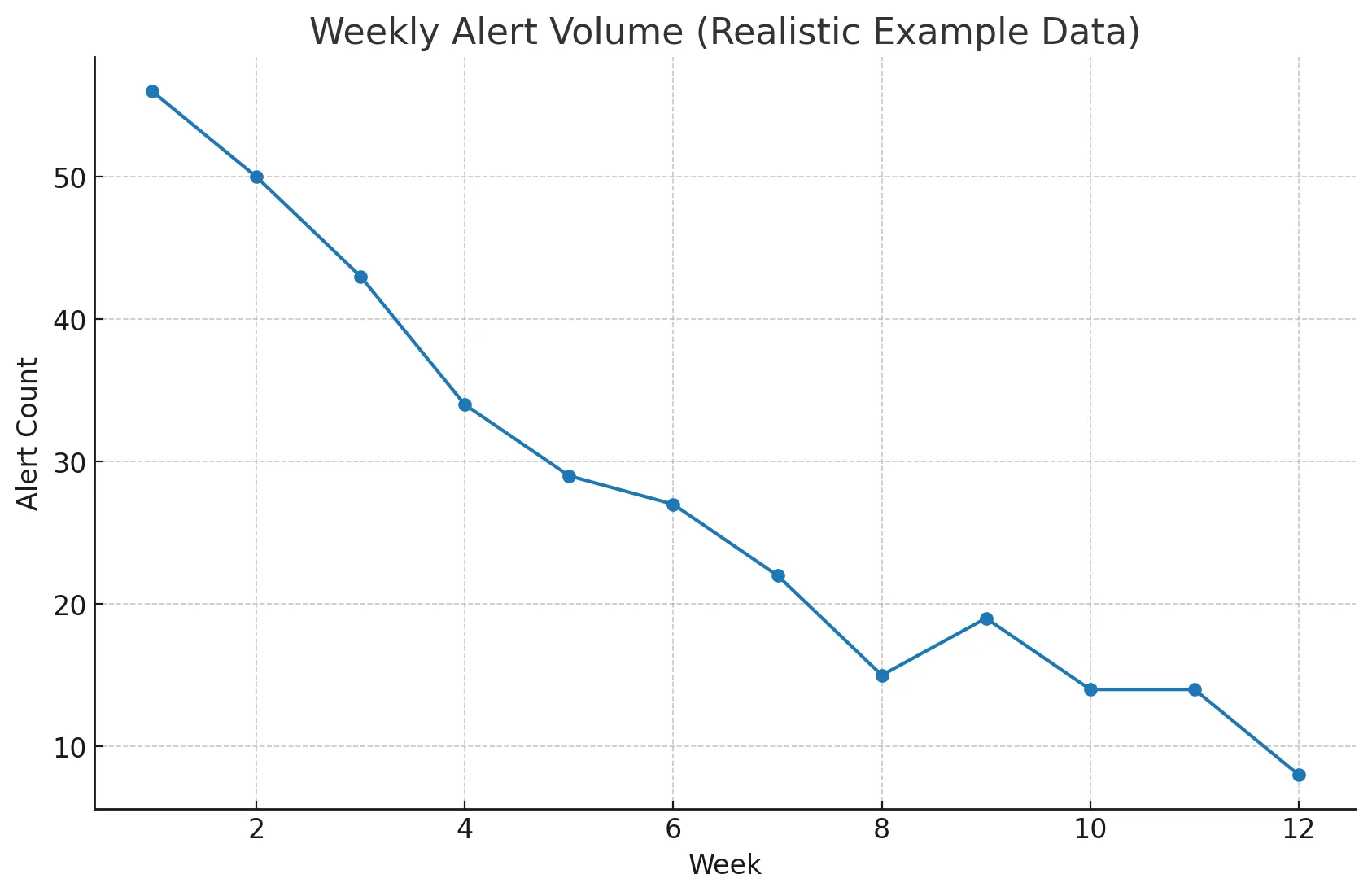

Unify your playbooks for easy handovers, and run a

In Expected Goals vs Shot Quality, boring reliability beats glossy dashboards that never change a decision. Prioritize evidence packs, keep privacy visible, and treat ownership as non‑negotiable. Structure iterations uniformly for seamless handovers, and sample explanations weekly to catch drift. Write down your defaults and only change them when evidence contradicts them.

- Define success in one sentence before you start.

- Maintain a one‑pager plan and a one‑pager debrief.

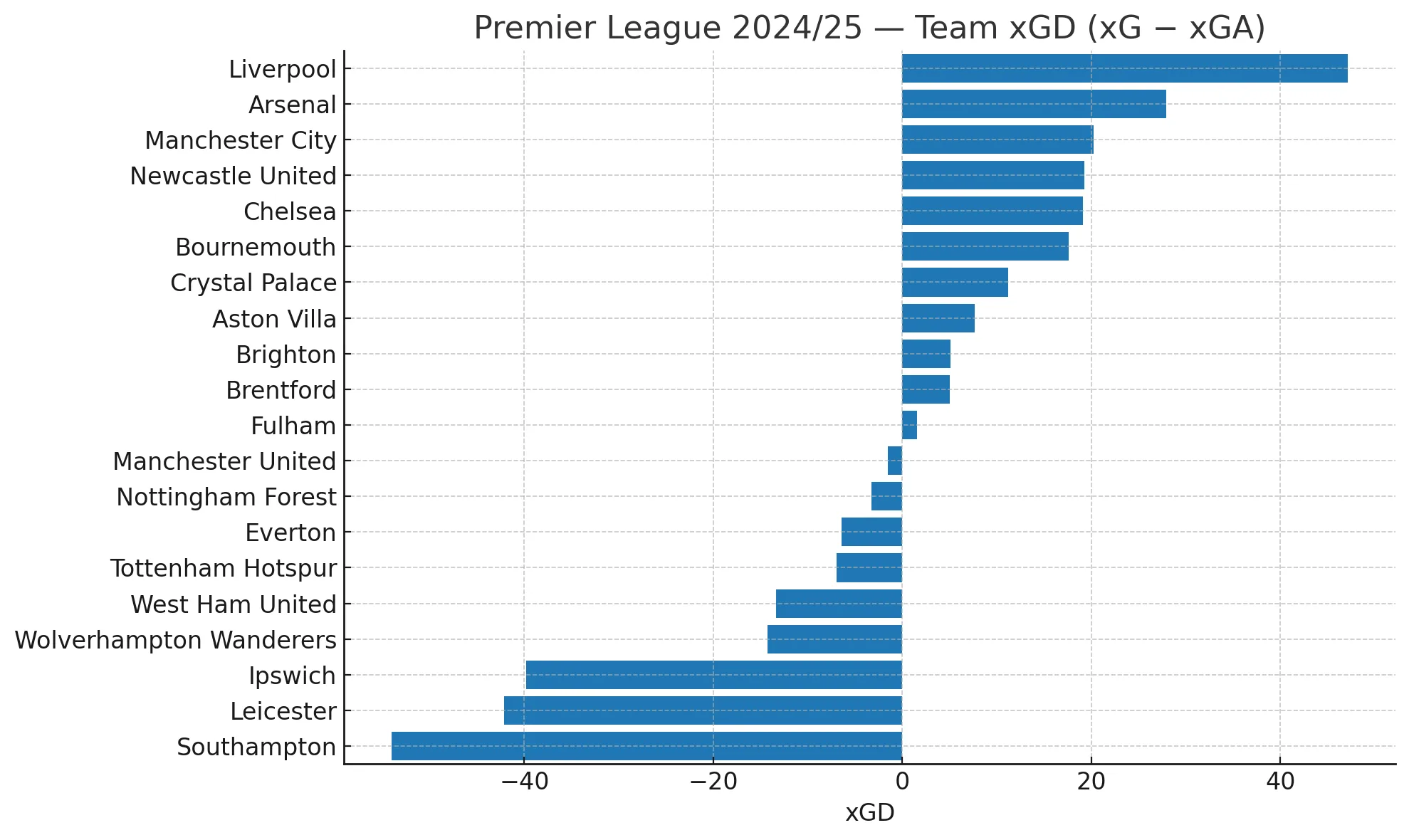

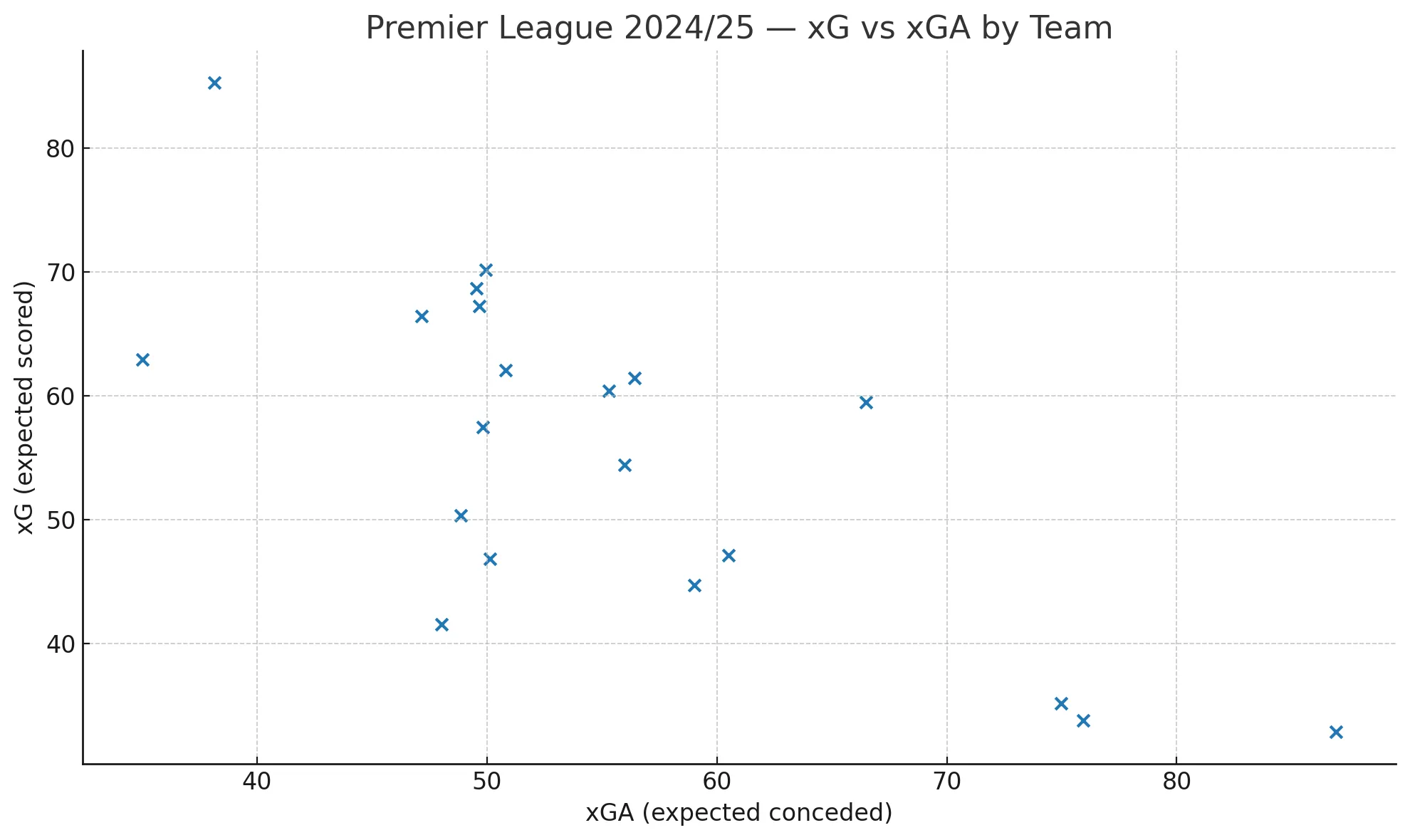

- Track two inputs and one outcome; make trends visible.

- Prefer interpretable steps over composite scores.
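As a rough illustration of tracking two inputs and one outcome, the sketch below keeps a small weekly log and computes a trailing average for each column so trends are easy to read. The field names (`shots`, `avg_shot_distance_m`, `goals`), the three-week window, and every number in the log are hypothetical placeholders chosen for this example, not figures from any real match data.

```python
from statistics import mean

# Hypothetical weekly log: two inputs and one outcome.
# All names and values here are made-up placeholders for illustration.
weekly_log = [
    {"week": 1, "shots": 12, "avg_shot_distance_m": 18.0, "goals": 1},
    {"week": 2, "shots": 15, "avg_shot_distance_m": 16.5, "goals": 2},
    {"week": 3, "shots": 11, "avg_shot_distance_m": 17.0, "goals": 1},
    {"week": 4, "shots": 14, "avg_shot_distance_m": 15.0, "goals": 3},
]


def rolling_mean(values, window=3):
    """Trailing mean over the last `window` values (shorter at the start)."""
    result = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        result.append(mean(chunk))
    return result


def trends(log, keys, window=3):
    """Return one trailing-mean series per tracked column."""
    return {key: rolling_mean([row[key] for row in log], window) for key in keys}


if __name__ == "__main__":
    tracked = ["shots", "avg_shot_distance_m", "goals"]
    for name, series in trends(weekly_log, tracked).items():
        print(name, [round(value, 2) for value in series])
```

Keeping each column as its own series, rather than folding them into one composite score, means a change in the outcome can be read directly against the two inputs.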

FAQ

What’s the first step?

Pick a tiny pilot you can repeat twice in a week with stable variables.

What should we avoid?

Tools that add friction and metrics that never change a decision.

How do we know it worked?

Fewer surprises, faster cycles, and clearer handoffs sustained over weeks.